What is Hive?

When it comes to working with bulk data, as in Big Data, Hadoop plays an important role. Hadoop is a conglomerate of many tools and components that help a data scientist work efficiently. Apache Hive is one such tool in the Hadoop ecosystem: an open-source data warehousing tool that makes it easy to query and analyze huge datasets stored in Hadoop.

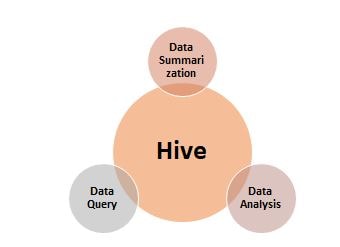

What can you do with Hive?

Hive is a server-side deployable tool that supports structured data and offers JDBC and BI integration. It provides HiveQL, an SQL-like query language used for data analysis and report creation. Hive is a data warehousing tool built on top of Hadoop, and the data it queries is stored in the Hadoop Distributed File System (HDFS). It is designed to perform key data warehousing functions, including encapsulating data, working with and analyzing huge datasets, and handling ad-hoc queries.

With Hive, you can create and work with structured data stored in tables, and manage and query such data comfortably.

If you want to improve query performance in Hadoop programming, Hive can help. It lets you partition data into a directory structure, so a query can skip the data it does not need and run faster.
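To make the partitioning idea concrete, here is a minimal Python sketch (plain Python, not Hive code) that mimics Hive's layout of one subdirectory per partition value, named `column=value`. A query that filters on the partition column only has to read the matching directory, which is where the speed-up comes from. The table path, the `country` column and the rows are hypothetical.

```python
from collections import defaultdict

# Hypothetical rows: (order_id, amount, country); country is the partition column.
ROWS = [
    (1, 120.0, "IN"),
    (2, 75.5, "US"),
    (3, 42.0, "IN"),
    (4, 300.0, "UK"),
]

TABLE_DIR = "/warehouse/sales"  # illustrative table location, not a real cluster path


def partition_rows(rows, table_dir):
    """Group rows into Hive-style partition directories named column=value."""
    partitions = defaultdict(list)
    for order_id, amount, country in rows:
        # The partition value is encoded in the directory name, not stored in the row.
        partitions[f"{table_dir}/country={country}"].append((order_id, amount))
    return dict(partitions)


def scan(partitions, country=None):
    """Return (directories read, matching rows).

    With a filter on the partition column, only the matching directory is read
    (partition pruning); without one, every directory is scanned.
    """
    if country is None:
        dirs = sorted(partitions)
    else:
        wanted = f"country={country}"
        dirs = [d for d in sorted(partitions) if d.rsplit("/", 1)[-1] == wanted]
    rows = [row for d in dirs for row in partitions[d]]
    return dirs, rows


parts = partition_rows(ROWS, TABLE_DIR)
dirs_read, rows = scan(parts, country="IN")
print(dirs_read)  # ['/warehouse/sales/country=IN']
print(rows)       # [(1, 120.0), (3, 42.0)]
```

Calling `scan(parts)` without a filter reads all three directories, which corresponds to a full table scan.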

In short, Hive lets you create, manage and query structured data stored in tables, and partition that data to speed up queries.

The core component that lets Hive work extensively on datasets is HiveQL. Hive translates HiveQL's SQL-like queries into MapReduce jobs and runs them on Hadoop.
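As a rough illustration of what this translation means, the Python sketch below (not the code Hive actually generates) walks through the map, shuffle and reduce phases that a simple `GROUP BY` count conceptually becomes. The `sales` table, its columns and the rows are hypothetical.

```python
from collections import defaultdict
from itertools import chain

# Hypothetical input rows for: SELECT country, COUNT(*) FROM sales GROUP BY country
ROWS = [("IN", 120.0), ("US", 75.5), ("IN", 42.0), ("UK", 300.0)]


def map_phase(row):
    """Map: emit (group-by key, 1) for every input row."""
    country, _amount = row
    yield country, 1


def shuffle(pairs):
    """Shuffle: collect all values emitted for the same key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: aggregate the values for one key (here, COUNT(*))."""
    return key, sum(values)


def run_group_by_count(rows):
    mapped = chain.from_iterable(map_phase(r) for r in rows)
    grouped = shuffle(mapped)
    return sorted(reduce_phase(k, vs) for k, vs in grouped.items())


print(run_group_by_count(ROWS))  # [('IN', 2), ('UK', 1), ('US', 1)]
```

In a real cluster the map and reduce functions run in parallel on many machines and the shuffle moves data across the network; the sketch keeps everything in one process to show only the data flow.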

Where can you use Hive?

- You can use Hive for data analysis that runs as batch jobs over large sets of append-only data, such as web logs.

- If you work with MapReduce directly, you have probably noticed that hand-written jobs are hard to optimize and tedious to use. In such cases, Hive is a good choice.

- A beginner learning to work with databases can start with HiveQL, because Hive does not have the complexity of MapReduce programming. This makes Hive a beginner-friendly tool too.

- Hive is best suited to cases where schema design flexibility and data serialization and deserialization are needed.

- Hive is an excellent ETL (Extract, Transform, Load) tool for data analysis systems that need extensibility and scalability.

- You can choose Hive when your data is stored in formats such as TEXTFILE, SEQUENCEFILE, ORC and RCFILE (Record Columnar File), all of which Hive supports.

Important features of Hive

- Hive’s command line interface (CLI) lets you interact with it. You can write queries in Hive Query Language (HQL) through this CLI.

- Although the name HQL sounds similar to SQL, SQL works on a traditional database, while HQL works on Hadoop’s infrastructure and executes its queries there.

- The metastore is an important part of Hive: it stores schema information in a relational database.

- Besides the CLI, a web GUI and a JDBC interface let you interact with Hive.

- In Hive, you create databases and tables first and then load data into them.

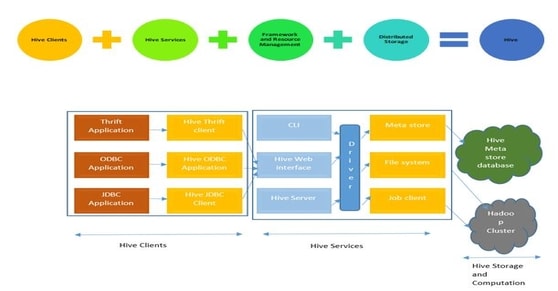

What does Hive’s architecture comprise?

Hive’s architecture comprises four major components, as shown in the diagram above: Hive clients, Hive services, framework and resource management, and distributed storage.

Let us talk about each of them in detail now:

Hive Clients

A Hive client is an application that sends queries to Hive. Through JDBC and ODBC drivers and Thrift, Hive supports client applications written in languages such as C++, Python and Java.

Hive supports three types of clients:

- Thrift clients: applications written in any language that supports Thrift.

- JDBC clients: Java applications that connect to Hive using the JDBC driver.

- ODBC clients: applications that connect to Hive using the ODBC protocol.

Hive Services

Hive services are the interfaces and components through which queries are submitted to Hive and processed. The web interface and the command line interface are examples. The main Hive services are discussed below:

Command Line Interface:

The CLI is the default Hive service; you use it to run your Hive queries.

Web Interface:

You can use the web interface to generate and run Hive queries and commands.

Hive Server:

Hive Server lets clients send requests to Hive and obtain the results. Because it is built on Apache Thrift, it is also called the Thrift server.

Hive Driver:

The Hive Driver receives the requests submitted to the Hive server. It accepts client queries submitted via Thrift, the web UI, JDBC, ODBC or the CLI.

Metastore:

The metastore is Hive’s central repository of metadata. It stores the schema and location of Hive tables and partitions in a relational database, and clients rely on it to look up this information.
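The following Python sketch uses an in-memory SQLite database as a stand-in for the metastore’s relational database, to show the idea of keeping table schemas and partition locations separate from the data itself. The table names `hive_tables` and `hive_partitions`, their columns and the `hdfs:///warehouse/sales` paths are a simplified, hypothetical illustration, not Hive’s real metastore schema.

```python
import sqlite3

# In-memory SQLite database standing in for the metastore's relational database.
# The schema is a simplified, hypothetical illustration, not Hive's real metastore schema.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE hive_tables (
        name     TEXT PRIMARY KEY,
        col_defs TEXT NOT NULL,  -- column names and types
        location TEXT NOT NULL   -- where the table's data files live
    );
    CREATE TABLE hive_partitions (
        table_name TEXT NOT NULL REFERENCES hive_tables(name),
        spec       TEXT NOT NULL,  -- partition spec such as 'country=IN'
        location   TEXT NOT NULL
    );
    """
)

# Registering a table and its partitions writes metadata only; no data files are touched.
conn.execute(
    "INSERT INTO hive_tables VALUES (?, ?, ?)",
    ("sales", "order_id INT, amount DOUBLE", "hdfs:///warehouse/sales"),
)
conn.executemany(
    "INSERT INTO hive_partitions VALUES (?, ?, ?)",
    [
        ("sales", "country=IN", "hdfs:///warehouse/sales/country=IN"),
        ("sales", "country=US", "hdfs:///warehouse/sales/country=US"),
    ],
)
conn.commit()


def lookup_table(name):
    """Return (column definitions, location) for a table, or None if unknown."""
    return conn.execute(
        "SELECT col_defs, location FROM hive_tables WHERE name = ?", (name,)
    ).fetchone()


def lookup_partitions(table):
    """Return (spec, location) pairs a query planner would consult before reading data."""
    return conn.execute(
        "SELECT spec, location FROM hive_partitions WHERE table_name = ? ORDER BY spec",
        (table,),
    ).fetchall()


print(lookup_table("sales"))
print(lookup_partitions("sales"))
```

The design point is that answering "what columns does `sales` have, and where are its files?" needs only a small relational lookup, never a scan of the data in HDFS.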

Three steps are involved in the processing of a Hive query: compilation, optimization and execution.

- During compilation, the Hive driver parses the submitted query, type-checks it and performs semantic analysis.

- As part of optimization, a directed acyclic graph (DAG) of MapReduce and HDFS tasks is created.

- Finally, once both steps succeed, the tasks are executed on Hadoop.
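The three steps can be sketched in Python as a toy pipeline. The "compiler" here only tokenizes the query, and the stage names (`scan_table`, `aggregate`, `write_results`) are hypothetical; the point is that execution runs the stages of a DAG in an order that respects their dependencies. The sketch uses `graphlib.TopologicalSorter` from the standard library, which requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def compile_query(sql):
    """Toy compilation: tokenize the query and reject statements this sketch can't handle.

    A real compiler would also parse, type-check and run semantic analysis.
    """
    tokens = sql.strip().rstrip(";").split()
    if not tokens or tokens[0].upper() != "SELECT":
        raise ValueError("this sketch only handles SELECT statements")
    return tokens


def optimize(tokens):
    """Toy optimization: build a DAG mapping each stage to the stages it depends on."""
    dag = {"scan_table": set()}
    last = "scan_table"
    if "GROUP" in (t.upper() for t in tokens):
        dag["aggregate"] = {last}  # a GROUP BY needs an aggregation stage after the scan
        last = "aggregate"
    dag["write_results"] = {last}  # e.g. move output files into place in HDFS
    return dag


def execute(dag):
    """Toy execution: return the order in which stages would run, respecting every dependency."""
    return list(TopologicalSorter(dag).static_order())


plan = optimize(compile_query("SELECT country, COUNT(*) FROM sales GROUP BY country;"))
print(execute(plan))  # ['scan_table', 'aggregate', 'write_results']
```

A query without `GROUP BY` produces a shorter DAG with no aggregation stage, so only `scan_table` and `write_results` would run.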

Framework and resource management

All query processing and execution take place here, using the Hadoop MapReduce framework.

Distributed Storage

Hive stores its data in the Hadoop Distributed File System (HDFS), which follows naturally from Hive being built on Hadoop.

One important point to note is that Hive metadata is not kept in HDFS: it is stored in the metastore’s relational database, separately from the table data.

What advantages does Hive offer?

Data warehousing is essentially a method of reporting and analysing data. A data warehousing tool inspects, filters, cleans and models data so that a data analyst can arrive at a proper conclusion. Apache Hive does a lot more than this.

Compared with a traditional RDBMS, Hive offers several advanced features:

- A user can define functions more easily in Hive than in a traditional DBMS.

- Complex analytical processing can be tricky in conventional databases, while Hive simplifies such processing.

- By easing the querying, analysing and summarizing of data, Hive improves workflow efficiency and reduces cost too.

- Hive’s HQL is flexible and offers many features for querying and processing data.

- It is easy for a data scientist to carry Hive queries over to R-based Hadoop packages such as RHive and RHIPE, or to other Hadoop packages.

- Hive can process and analyse huge datasets at a scale a traditional RDBMS struggles with, and because its metadata is stored in a relational database, metadata lookups remain fast.

- Hive is learner friendly: even someone new to RDBMS can program Hadoop easily, because Hive eliminates the complex programming that MapReduce requires.
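One lightweight way to plug custom logic into Hive without writing a Java UDF is a streaming script used with HiveQL’s `TRANSFORM` clause: Hive sends each row to the script’s standard input as a tab-separated line and reads tab-separated rows back from its standard output. The Python sketch below shows the per-row logic; the column names and the `high`/`low` rule are hypothetical.

```python
import sys


def transform_line(line):
    """Turn one tab-separated input row (name, amount) into an output row.

    Hypothetical rule: upper-case the name and label amounts of 100 or more
    as "high", everything else as "low".
    """
    name, amount = line.rstrip("\n").split("\t")
    category = "high" if float(amount) >= 100 else "low"
    return f"{name.upper()}\t{category}\n"


def main(lines):
    """Transform every non-empty input line, as Hive would stream them."""
    return [transform_line(line) for line in lines if line.strip()]


if __name__ == "__main__":
    # Sample rows for a local check; a real TRANSFORM script would read sys.stdin instead.
    sample = ["asha\t150\n", "ravi\t20\n"]
    sys.stdout.writelines(main(sample))
```

Assuming the script is saved as a hypothetical `categorize.py` that calls `sys.stdout.writelines(main(sys.stdin))`, a query along the lines of `ADD FILE categorize.py;` followed by `SELECT TRANSFORM(name, amount) USING 'python3 categorize.py' AS (name, category) FROM sales;` would stream the table’s rows through it.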

Top reasons why you have a bright future with Apache Hive

- Companies working with big data use Hive to analyse their data because they are already well established with SQL and data warehouse concepts.

- Hadoop offers excellent solutions to Big Data problems, and compared with Apache Pig or MapReduce, the outlook for Apache Hive is very positive.

- Experts see Hive as the future of enterprise data management and as a one-stop solution for business intelligence and visualization needs.

- Besides data scientists, Hive works very well for developers too. It has all the features needed to code new data architecture projects and new business applications.

- Hive is beginner friendly, and any software enthusiast with basic knowledge of SQL can learn Hive and get started with Hive programming easily.

- Hive has a great future, with many use cases rapidly emerging, which means more opportunities for Hive developers.

- In India, the average salary for Hive programmers is around Rs. 8 lakhs, and it rises considerably with experience.

Shape your career with Hive and get trained in Hive technology from GangBoard, the expert in IT and Programming Training and Certification.
