What is an Artificial Neural Network?


You will come across this term, or a related one, every so often on various platforms. So why are neural networks so common nowadays? How did they get so popular?

Artificial neural networks have been around since the 1940s, but their popularity has grown for several reasons: the advent of loss optimization techniques such as backpropagation, the availability of GPUs, and large amounts of data.

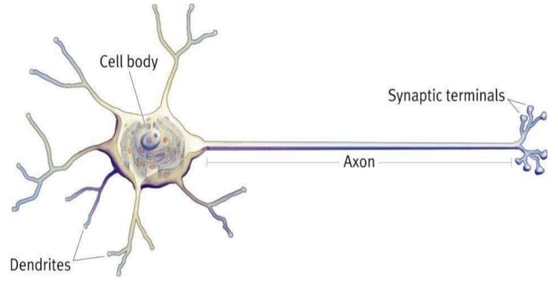

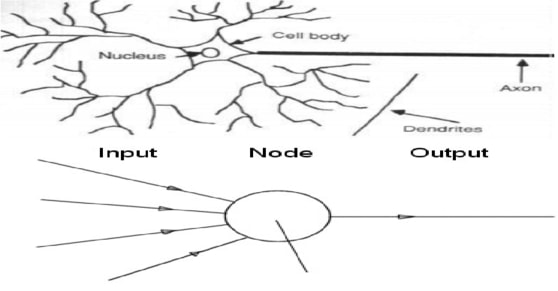

Artificial neural networks, commonly abbreviated as ANNs and sometimes loosely called perceptrons (strictly, the perceptron is one particular early type of ANN), are a mathematical imitation of the biological neural networks of animals. They are inspired by the biological neural network and its basic constituent, the neuron. However, they are not exact replicas of their biological counterparts.

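Agenda:

- Artificial Neural Networks

- Introduction to Neurons

- Activation Functions

- Neural Network Architecture

- Applications, Advantages and Limitations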

Artificial Neural Networks

An artificial neural network is, in general, a biologically inspired network of artificial neurons configured to perform specific tasks. The neural network itself is not an algorithm but rather a framework within which many different machine learning algorithms can work together to process complex data inputs.

ANNs are made up of (artificial) neurons. The initial aim of artificial neural networks was to replicate a biological neural network completely. Over time, however, the aim shifted toward solving specific problems with ANNs rather than replicating a biological neuron or network. This is likely because a biological neuron is very difficult to replicate as a mathematical function, so a simplified version is used instead.

If, at some point, we are able to replicate a biological neuron completely, we might achieve tasks that are currently believed to be possible only for humans or animals.

Even so, these simplified models already enable us to perform complex tasks that were impossible a few decades ago. Thanks to increased computational power and huge amounts of data, we can even build ANNs ourselves on our local machines.

ANNs can be used in place of traditional algorithms for classification, clustering, forecasting, etc. They tend to outperform traditional algorithms significantly once we start dealing with audio, video, images, and text. Remarkable progress has been made by using ANNs for tasks such as language translation, speech recognition, computer vision, gaming, and more.

The buzzword nowadays in the data science community is deep learning. Let's discuss how deep learning differs from an artificial neural network.

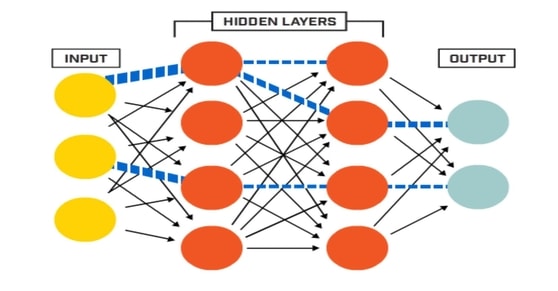

Deep learning is one of the machine learning methods built on artificial neural networks. In simple terms, a deep learning network is a more complex version of an artificial neural network. One noticeable difference between them is that deep learning networks have many more hidden layers than a simple artificial neural network.

Deep learning is also known as deep structured learning or hierarchical learning. Both artificial neural networks and deep learning networks can be used for supervised, unsupervised, or semi-supervised learning.

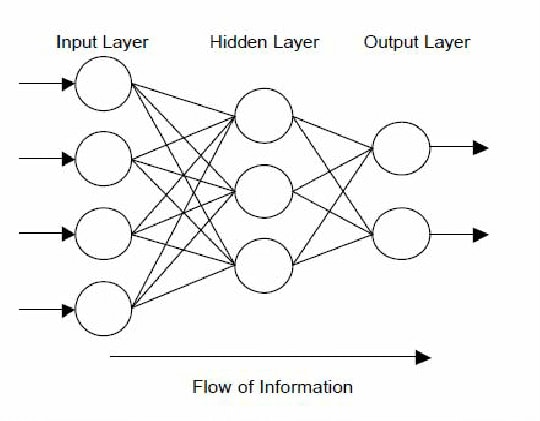

Let us look at the structure of a simple artificial neural network.

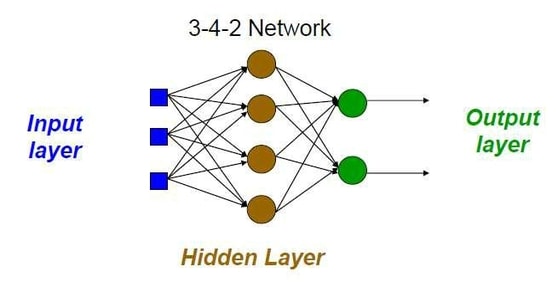

The number of nodes in the input layer is directly related to the number of features, i.e. columns, in the data: as the number of features increases, so does the number of input nodes. Next comes the hidden layer. It consists of artificial neurons connected to the nodes of the input layer. There need not be only one hidden layer; the number can be varied as required. Usually, as the complexity of the problem increases, so does the number of hidden layers, and deep learning networks tend to have many of them.
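To make the link between features, hidden layers and network size concrete, here is a minimal plain-Python sketch. It assumes fully connected layers with one bias per node; the function names `layer_shapes` and `count_parameters` and the layer widths are hypothetical, chosen only for illustration.

```python
def layer_shapes(n_features, hidden_sizes, n_outputs):
    """Return (inputs, outputs) for each fully connected layer.

    The input layer has one node per feature (column); the hidden-layer
    widths are design choices; the output width depends on the task.
    """
    sizes = [n_features] + list(hidden_sizes) + [n_outputs]
    return [(sizes[i], sizes[i + 1]) for i in range(len(sizes) - 1)]


def count_parameters(shapes):
    """Each layer has (inputs x outputs) weights plus one bias per output node."""
    return sum(n_in * n_out + n_out for n_in, n_out in shapes)


# A "shallow" network: 4 features, one hidden layer of 8 nodes, 1 output.
shallow = layer_shapes(4, [8], 1)
# A "deeper" network on the same data: three hidden layers of 8 nodes.
deep = layer_shapes(4, [8, 8, 8], 1)

print(shallow)                    # [(4, 8), (8, 1)]
print(count_parameters(shallow))  # 49
print(deep)                       # [(4, 8), (8, 8), (8, 8), (8, 1)]
print(count_parameters(deep))     # 193
```

Adding one more feature column adds one input node, and with it one extra weight for every node in the first hidden layer; adding hidden layers is what makes the second network "deeper".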

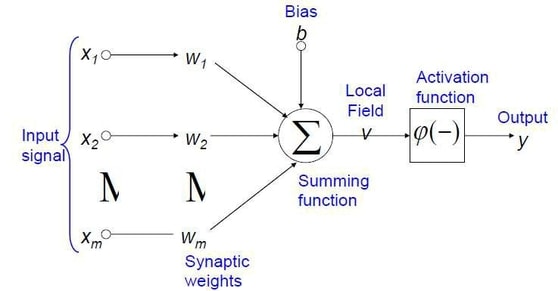

The nodes in the hidden layers take the input values, process them, and pass their outputs on toward the output layer. So, what happens in each neuron or node? Each node is a mathematical function, linear or nonlinear, applied to the values it receives; its result is passed on to the next layer.
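The flow from input layer to hidden layer to output layer can be sketched in a few lines of plain Python. This is an illustrative forward pass only, assuming a 3-input, 2-hidden-node, 1-output network; the weights are made up rather than learned, and the sigmoid (hidden) and identity (output) activations are our choice.

```python
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each node takes a weighted sum of all
    inputs plus its bias, then applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    return z


# Hypothetical weights: one row per receiving node.
hidden_w = [[0.2, -0.5, 0.1],   # weights into hidden node 1
            [0.7, 0.3, -0.2]]   # weights into hidden node 2
hidden_b = [0.0, 0.1]
output_w = [[1.5, -1.0]]        # weights from the two hidden nodes to the output
output_b = [0.2]

x = [1.0, 2.0, 3.0]                           # one row of input features
h = dense(x, hidden_w, hidden_b, sigmoid)     # hidden layer (nonlinear nodes)
y = dense(h, output_w, output_b, identity)    # output layer (linear node)
print(h, y)
```

In a trained network the same computation runs unchanged; only the weight and bias values would come from training instead of being typed in.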

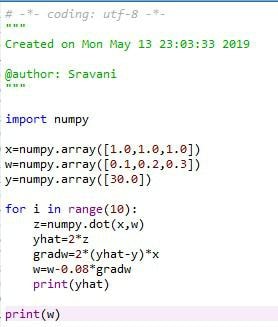

Neural networks learn from examples. Some important points about ANNs:

- No explicit description of the problem is required.

- No programmer is needed to write out the solution. The neural computer adapts itself during a training period, based on examples of similar problems, even without a desired solution to each problem.

- After sufficient training, the neural computer is able to relate the problem data to the solutions, i.e. inputs to outputs, and it is then able to offer a viable solution to a brand-new problem.

- It is able to generalize and to handle incomplete data.
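As a small illustration of learning from examples rather than from explicit rules, the sketch below trains a single step-activation neuron with the classic perceptron learning rule. The toy dataset (label 1 when x1 + x2 > 1) and the helper names `predict` and `train_perceptron` are our own assumptions; nothing in the code states the rule, it is only inferred from the labelled examples.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0


def train_perceptron(examples, lr=1.0, max_epochs=1000):
    """Perceptron learning rule: nudge the weights toward each example the
    current model gets wrong, until a full pass produces no mistakes."""
    n = len(examples[0][0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in examples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:
            return weights, bias, True   # every training example is correct
    return weights, bias, False


# Made-up training examples: the label is 1 when x1 + x2 > 1.
examples = [
    ((0.0, 0.0), 0), ((0.2, 0.3), 0), ((0.4, 0.1), 0), ((0.1, 0.6), 0),
    ((1.0, 1.0), 1), ((0.8, 0.9), 1), ((0.9, 0.4), 1), ((0.6, 0.8), 1),
]
weights, bias, converged = train_perceptron(examples)

# Inputs the neuron never saw during training.
print(predict(weights, bias, (0.05, 0.1)))   # 0
print(predict(weights, bias, (0.95, 0.85)))  # 1
```

Because these toy classes are linearly separable, the rule is guaranteed to stop with every training example correct; the two new points lie well inside their respective classes, so the trained neuron generalizes to them.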

Introduction to Neurons

An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal from one artificial neuron to another.

Neurons vs. Nodes:

An artificial neuron that receives a signal can process it and then signal additional artificial neurons connected to it. Artificial neurons and edges typically have a weight that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. The output at each node is called its activation or node value.

Single Neuron Model
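A single artificial neuron, as described above, computes a weighted sum of its incoming signals plus a bias and passes the result through an activation function f; the output is its activation or node value, a = f(w1·x1 + w2·x2 + … + b). The sketch below assumes a sigmoid for f and uses made-up weights to show how a weight strengthens, weakens or inhibits the signal on a connection.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Single neuron: weighted sum of the incoming signals plus a bias,
    passed through a sigmoid activation. The result is the node value."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


signals = [0.5, 1.0]  # outputs of two upstream neurons

weak = neuron(signals, weights=[0.1, 0.2], bias=0.0)
strong = neuron(signals, weights=[2.0, 0.2], bias=0.0)    # larger weight on connection 1
inhibit = neuron(signals, weights=[-2.0, 0.2], bias=0.0)  # negative weight suppresses it
print(weak, strong, inhibit)
```

During training, it is exactly these weight (and bias) values that are adjusted.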

Activation Functions

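Activation functions introduce non-linear properties into a neural network. Strictly, it is the non-linear ones that do this: a network that uses only linear activations collapses into a single linear function, however many layers it has. There are two types of activation functions: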

- Linear Activation Functions

- Non-linear Activation Functions
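The two families can be contrasted in a short sketch. It defines a linear activation and three common non-linear ones (sigmoid, tanh and ReLU, our choice of examples), then shows that two stacked neurons with linear activations compute exactly the same function as one linear neuron, while putting a ReLU in between does not. The weights are arbitrary illustrative values.

```python
import math


def linear(z, slope=1.0):
    """Linear activation: output proportional to the input."""
    return slope * z


def sigmoid(z):
    """Non-linear: squashes any input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Non-linear: squashes any input into (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Non-linear: passes positive inputs through, zeroes out negative ones."""
    return max(0.0, z)


def two_linear_layers(x, w1=3.0, b1=1.0, w2=-2.0, b2=0.5):
    """Two stacked single-input neurons, both with linear activation."""
    h = linear(w1 * x + b1)
    return linear(w2 * h + b2)


def one_linear_layer(x):
    """One linear neuron with weight w2*w1 = -6 and bias w2*b1 + b2 = -1.5."""
    return linear(-6.0 * x - 1.5)


def two_layers_with_relu(x, w1=3.0, b1=1.0, w2=-2.0, b2=0.5):
    """Same weights as two_linear_layers, but a ReLU in the hidden neuron."""
    h = relu(w1 * x + b1)
    return linear(w2 * h + b2)


for x in [-2.0, -1.0, 0.0, 1.0, 2.0]:
    # The first two values always agree; the third differs when x < -1/3.
    print(x, two_linear_layers(x), one_linear_layer(x), two_layers_with_relu(x))
```

This is why extra layers only add expressive power when at least some of the neurons use a non-linear activation.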

Neural Network Architecture

Types of Neural Networks

- Feedforward ANN

- Feedback ANN

The architecture of a neural network is linked to the learning algorithm used to train it.

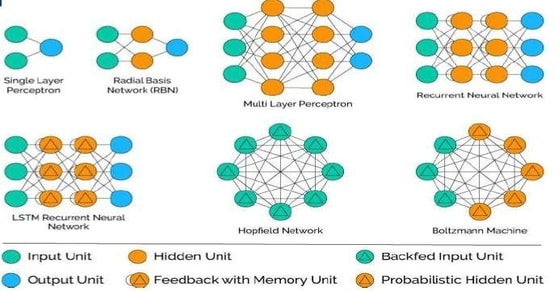

There are three basic classes of network architecture:

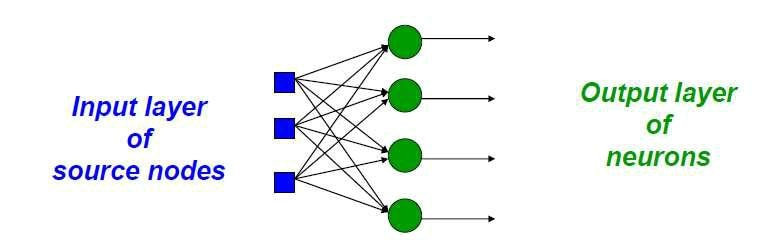

- Single-layer feed-forward Neural Networks

- Multi-layer feed-forward Neural Networks

- Recurrent Neural Networks

Single-layer feed-forward Neural Networks:

A neural network whose input units connect directly to the output unit(s), with no hidden layers, is called a single-layer feed-forward neural network; the simplest case has two input units and one output unit. These networks are also known as “single-layer perceptrons”.
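A single-layer network with two input units and one step-activation output unit can be sketched as below. With hand-picked weights (our assumption) it computes logical AND; a brute-force search over a small grid of weights and biases then finds no setting that computes XOR, consistent with the well-known fact that XOR is not linearly separable and so cannot be computed without a hidden layer. The grid search is only an illustration, not a proof.

```python
import itertools


def single_layer(x1, x2, w1, w2, b):
    """Two input units connected directly to one step-activation output unit."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [0, 0, 0, 1]
XOR = [0, 1, 1, 0]


def computes(target, w1, w2, b):
    """True if the unit reproduces the target truth table on all four inputs."""
    return all(single_layer(x1, x2, w1, w2, b) == t
               for (x1, x2), t in zip(INPUTS, target))


# Hand-picked weights that make the unit behave like logical AND.
print(computes(AND, 1.0, 1.0, -1.5))  # True

# Search a grid of weights and biases for a setting that computes XOR.
grid = [i / 2 for i in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
found_xor = any(computes(XOR, w1, w2, b)
                for w1, w2, b in itertools.product(grid, repeat=3))
print(found_xor)  # False
```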

Multi-layer feed-forward Neural Networks:

A neural network with N hidden layers (N ≥ 1) between its input units and output units, in which signals flow only forward, is called a multi-layer feed-forward neural network.
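Adding a hidden layer is what lets a feed-forward network compute functions such as XOR, which a single-layer network cannot. The sketch below is a 2-2-1 network of step units whose weights were set by hand for illustration (an assumption; in practice they would be learned, e.g. with backpropagation). One hidden unit acts as OR, the other as AND, and the output fires for "OR but not AND".

```python
def step(z):
    """Step activation: 1 if the input is positive, else 0."""
    return 1 if z > 0 else 0


def xor_network(x1, x2):
    """2 inputs -> 2 hidden step units -> 1 output step unit."""
    h_or = step(x1 + x2 - 0.5)        # hidden unit 1: fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)       # hidden unit 2: fires only if both inputs are 1
    return step(h_or - h_and - 0.5)   # output unit: OR but not AND


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, xor_network(x1, x2))  # outputs 0, 1, 1, 0 in this order
```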

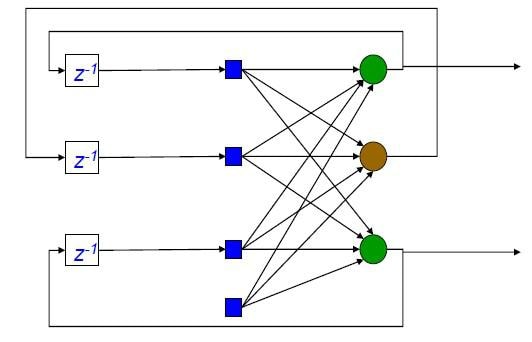

Recurrent Neural Networks:

A recurrent neural network works on the principle of saving the output of a layer and feeding it back to the input, to help predict the layer's next output.

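z⁻¹ is the unit-delay operator: it holds a signal for one time step before feeding it back, which makes the network a dynamic system whose output depends on past inputs as well as the current one.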
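The feedback loop with a unit delay can be sketched with a single scalar recurrent neuron. This assumes the common Elman-style update h_t = tanh(w_x·x_t + w_h·h_(t-1) + bias), with arbitrary illustrative weights; the variable holding the previous output plays the role of z⁻¹. Two sequences that end with the same input produce different final outputs because their histories differ.

```python
import math


def rnn(sequence, w_x=0.8, w_h=0.5, bias=0.0, h0=0.0):
    """Scalar recurrent neuron: h_t = tanh(w_x * x_t + w_h * h_{t-1} + bias).

    `h_prev` plays the role of the unit delay z^-1: it holds the previous
    step's output and feeds it back in alongside the next input."""
    h_prev = h0
    outputs = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h_prev + bias)
        outputs.append(h)
        h_prev = h  # delay the output by one time step
    return outputs


# The last input is 0.0 in both sequences; only the earlier history differs.
with_history = rnn([1.0, 0.0, 0.0])
without_history = rnn([0.0, 0.0, 0.0])
print(with_history[-1], without_history[-1])
```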

Applications, Advantages and Limitations

Advantages:

- A neural network can perform tasks that a linear program cannot.

- When an element of the neural network fails, the network can often continue to operate because of its parallel nature.

- A neural network learns and does not need to be reprogrammed.

- It can be used in a wide range of applications.

Limitations:

- A neural network needs training before it can operate.

- The architecture of a neural network differs from the architecture of microprocessors, so it needs to be emulated.

- Large neural networks require long processing times.

Applications:

| Application | Architecture/Algorithm | Activation Function |
| --- | --- | --- |
| Process Modeling and Control | Radial Basis Network | Tan-Sigmoid Function |
| Machine Diagnostics | Multilayer Perceptron | Logistic Function |
| Portfolio Management | Classification Supervised Algorithm | |
| Target Recognition | Modular Neural Network | |
| Medical Diagnosis | Multilayer Perceptron | |
| Credit Rating | Logistic Discriminant Analysis with ANN, Support Vector Machine | |
